same logs as before

this is my query plan

my code does: `spark.table(table).filter(condition).select(col1, col2).write.mode('overwrite').format('parquet').save(path)`

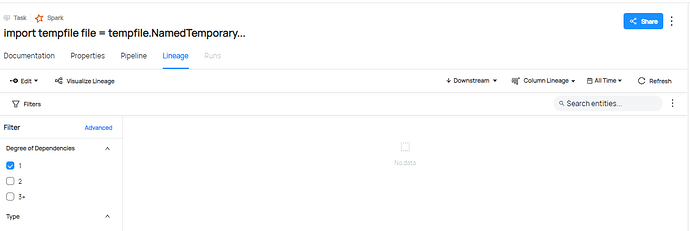

<@UV14447EU> A different task appeared now!!! Niiicee!! But there is still no lineage to Hive, do you know what it could be? Is the task linked to the table by table name or by path?

With Parquet the task appears but without lineage; with Delta no task appears at all

Parquet: InsertHadoopFsRelationCommand

Delta: SaveIntoDataSourceCommand

maybe I should set a platform instance? I didn’t understand what it is

The path on the task appears without the dbfs:/ scheme, while on Hive the table location appears with the dbfs:/ scheme
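To show what I mean by the mismatch, here is a minimal sketch, assuming the two sides differ only by the dbfs:/ scheme. The helper name and the example paths are made up, and this is not how the integration matches datasets internally; it is just a quick way to check whether two locations are the same once the scheme is dropped.

```python
def strip_dbfs_scheme(path: str) -> str:
    """Drop a leading 'dbfs:' scheme and normalize slashes so both forms compare equal."""
    if path.startswith("dbfs:"):
        path = path[len("dbfs:"):]
    # Keep exactly one leading slash and no trailing slash.
    return "/" + path.strip("/")


# Hypothetical paths, only for illustration.
task_path = "/mnt/data/my_table"
hive_location = "dbfs:/mnt/data/my_table"

same_location = strip_dbfs_scheme(task_path) == strip_dbfs_scheme(hive_location)
```

If `same_location` is true for the real values, the scheme is the only difference between the two paths.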

Update:

Lineage worked for downstream Hive tables!!! I had to write the data as Parquet and use Spark's saveAsTable method instead of save(path).
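For reference, a rough sketch of the write that produced lineage, assuming the same table/filter/select chain as before but ending in saveAsTable. The function name and its arguments are placeholders, not the real job.

```python
def write_as_table(spark, source_table, condition, columns, target_table):
    """Read a table, filter and project it, then save it as a Parquet-backed table.

    `spark` is an existing SparkSession (e.g. the one a notebook provides);
    all other arguments are hypothetical placeholders.
    """
    (
        spark.table(source_table)
        .filter(condition)
        .select(*columns)
        .write.mode("overwrite")
        .format("parquet")
        # saveAsTable registers the output in the metastore, unlike save(path).
        .saveAsTable(target_table)
    )
```

In a notebook this would be called as `write_as_table(spark, "db.source", "col1 > 0", ["col1", "col2"], "db.target")`, with the table names swapped for real ones.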

I’ll keep trying to get it working for Delta tables. Just one question: does the Spark integration ingest upstream Hive tables too? <@UV14447EU>